Explore the significance of maintaining up-to-date data, the challenges involved, and how our solutions can ensure your data remains fresh to meet SLAs.

Introduction

Have you ever experienced delays in delivering tables that or machine learning (ML) models that directly power customer experiences due to stale data? Ensuring timely data is crucial for maintaining the effectiveness and reliability of these mission-critical products. In this post, we'll explore the importance of data freshness, the challenges associated with it, and how DataHub can help you meet your data freshness SLAs consistently.

What is Data Freshness?

Data freshness refers to the timeliness and completeness of data used to build tables and ML models. Specifically, freshness can be measured by the difference in time between when some event actually occurs vs when that record of that event is reflected in a dataset or used to train an AI model.

To make things concrete, let’s imagine you run an e-commerce business selling t-shirts. When a user clicks the final “purchase” button to finalize a purchase, this interaction is recorded, eventually winding up in a consolidated “click_events” table on your data warehouse. Data freshness in this case could be measured by comparing when the actual click was performed against when the record of the click landed in the data warehouse. In reality, freshness can be measured against any reference point - e.g. event time, ingestion time, or something else - in relation to when a target table, model, or other data product is updated with new data.

Data Freshness

Oftentimes, data pipelines are designed in order meet some well-defined availability latency, or data freshness SLA, with the specifics of this type of agreement dictating how and when the data pipeline is triggered to run.

In the modern data landscape, ensuring that data is up-to-date is vital for building high-quality data products, from reporting dashboards used to drive day-to-day company decisions to personalized and dynamic data- or AI-powered product experiences.

Why Data Freshness Matters

For many organizations, fresh data is more than a ‘nice to have’.

Mission-critical ML models, like those used for price prediction or fraud detection, depend heavily on fresh data to make accurate predictions. Delays in updating these models can lead to lost revenue and damage to your company's reputation.

Customer-facing data products, for example recommendation features, also need timely updates to ensure that customers receive the most recent and relevant information personalized to them. Delays in data freshness can result in customer frustration, user churn, and loss of trust.

Key Considerations for Your Organization

Critical Data and ML Models:

Can you recall examples when your organization faced challenges in maintaining the timeliness of mission-critical datasets and ML models? If your organization relies on data to deliver concrete product experiences, compliance auditing, or for making high-quality day-to-day decision, then stale data can significantly impact revenue and customer satisfaction. Consider identifying which datasets and models are most critical to your operations and quantifying the business impact of delays.

Impact Identification and Response:

Because data is highly interconnected, delays in data freshness can lead to cascading problems, particularly of your organization lacks a robust system for identifying and resolving such problems. How does your organization prioritize and manage such incidents? Processes for quickly identifying and resolving root causes are essential for minimizing negative impacts on revenue and reputation.

Automated Freshness Monitoring:

If data freshness problems often go undetected for long periods of time, there may be opportunities to automate the detection of such problems for core tables and AI models so that your team is first to know when something goes wrong.

How to Ensure Data Freshness

Ensuring data freshness involves several best practices and strategies. Here’s how you can achieve it:

Best Practices and Strategies

Data Lineage Tracking:

Utilize data lineage tracking to establish a bird’s eye view of data flowing through your systems - a picture of the supply chain of data within your organization. This helps in pinpointing hotspots where delays occur and understanding the full impact of such delays to coordinate an effective response.

Automation and Monitoring:

Implement automated freshness monitoring to detect and address issues promptly. This reduces the need for manual debugging and allows for quicker response times. It can also help you to establish peace-of-mind by targeting your most impactful assets.

Incident Management:

Establish clear protocols for incident management to prioritize and resolve data freshness issues effectively. This includes setting up notifications and alerts for timely intervention, and a broader communication strategy to involve all stakeholders (even those downstream) in the case of an issue.

Alternatives

While manual investigation and communication using tools like Slack can help triage issues, they often result in time-consuming, inefficient, and informal processes for addressing data quality issues related to freshness, ultimately leading to lower quality outcomes. Automated freshness incident detection and structured incident management via dedicated data monitoring tools can help improve the situation by providing a single place for detecting, communicating, and coordinating to resolve data freshness issues.

How DataHub Can Help

DataHub offers comprehensive features designed to tackle data freshness challenges:

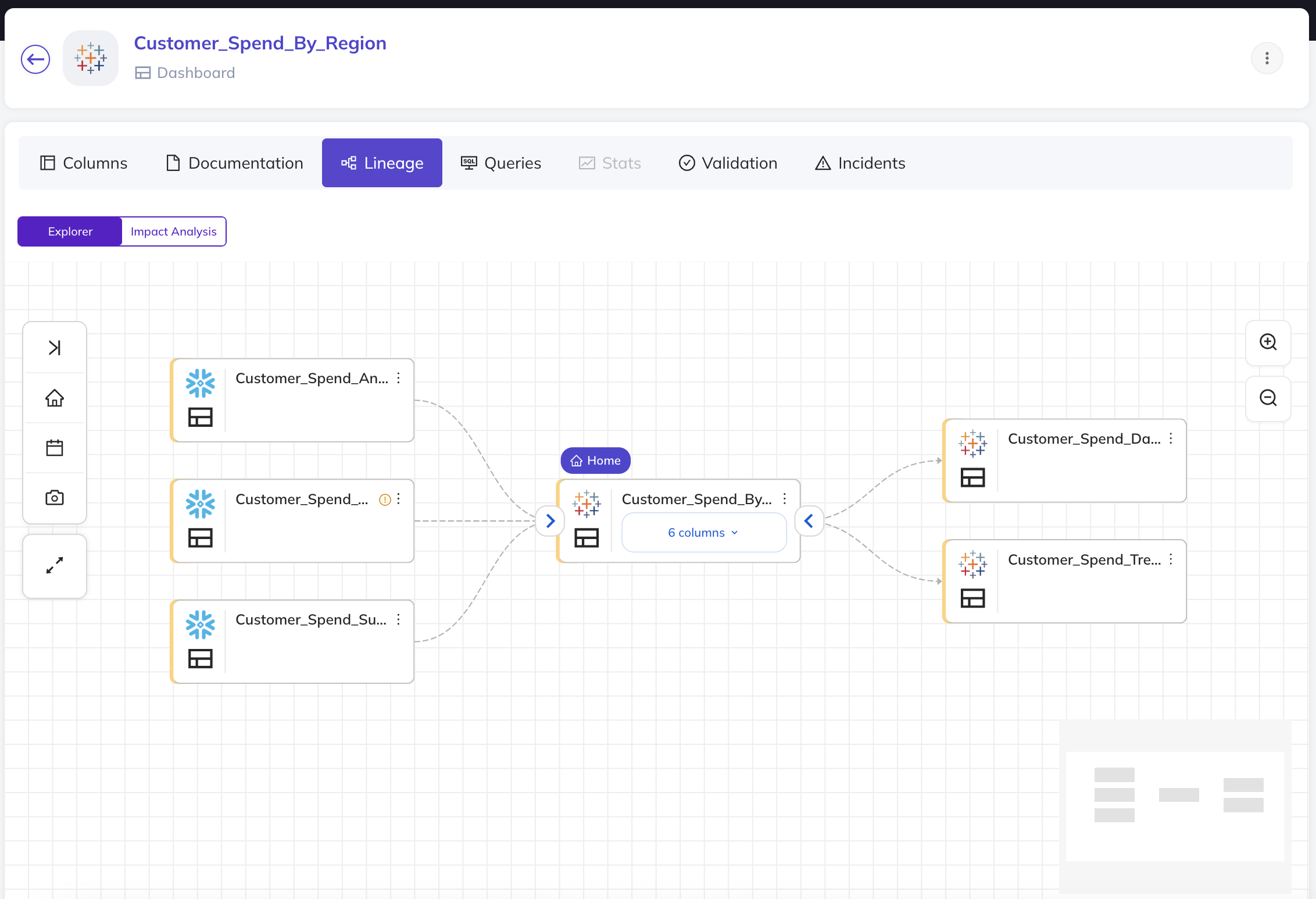

End-To-End Data Lineage and Impact Analysis: Easily track the flow of data through your organization to identify, debug, and resolve delays quickly.

Data Lineage

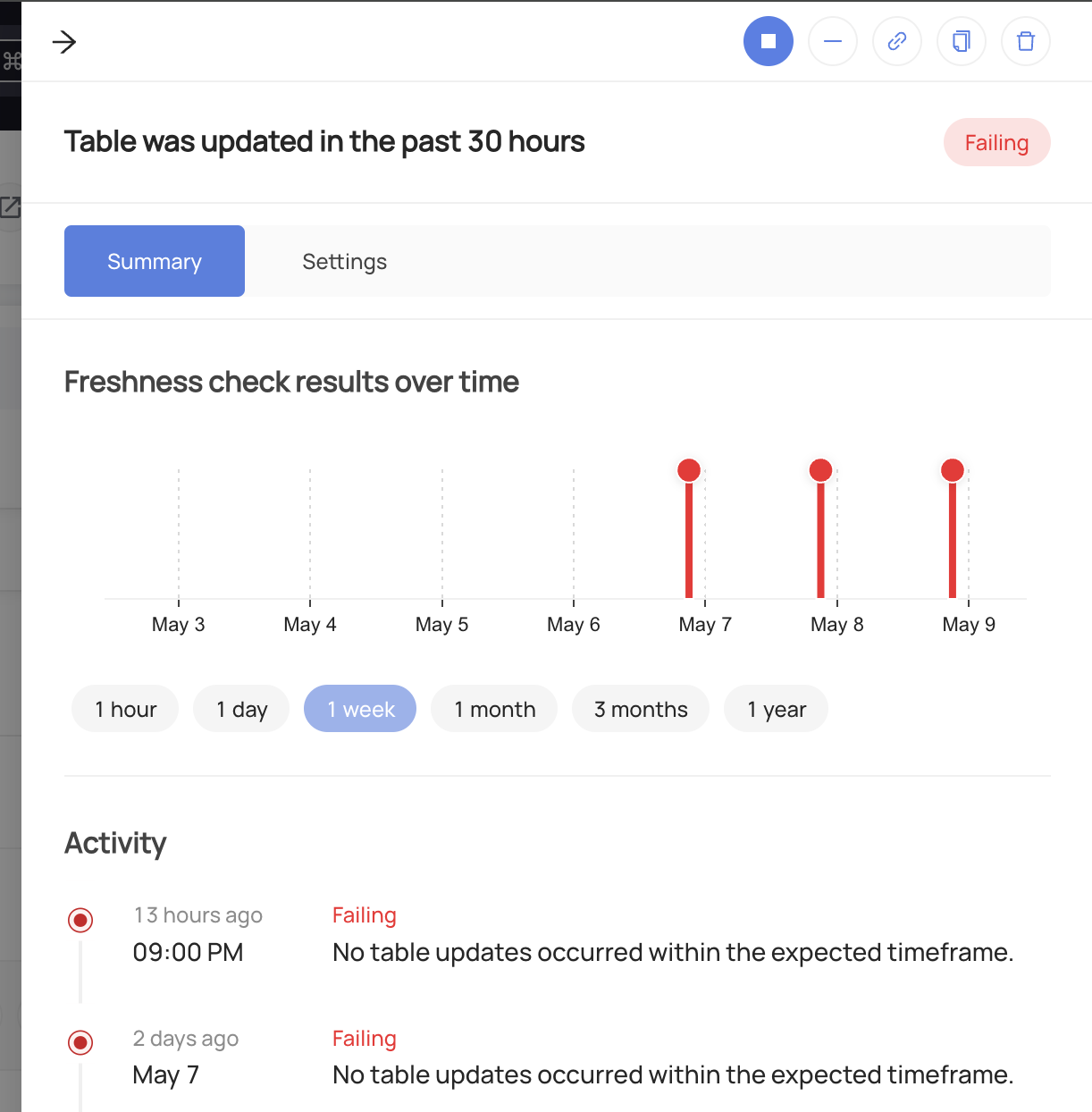

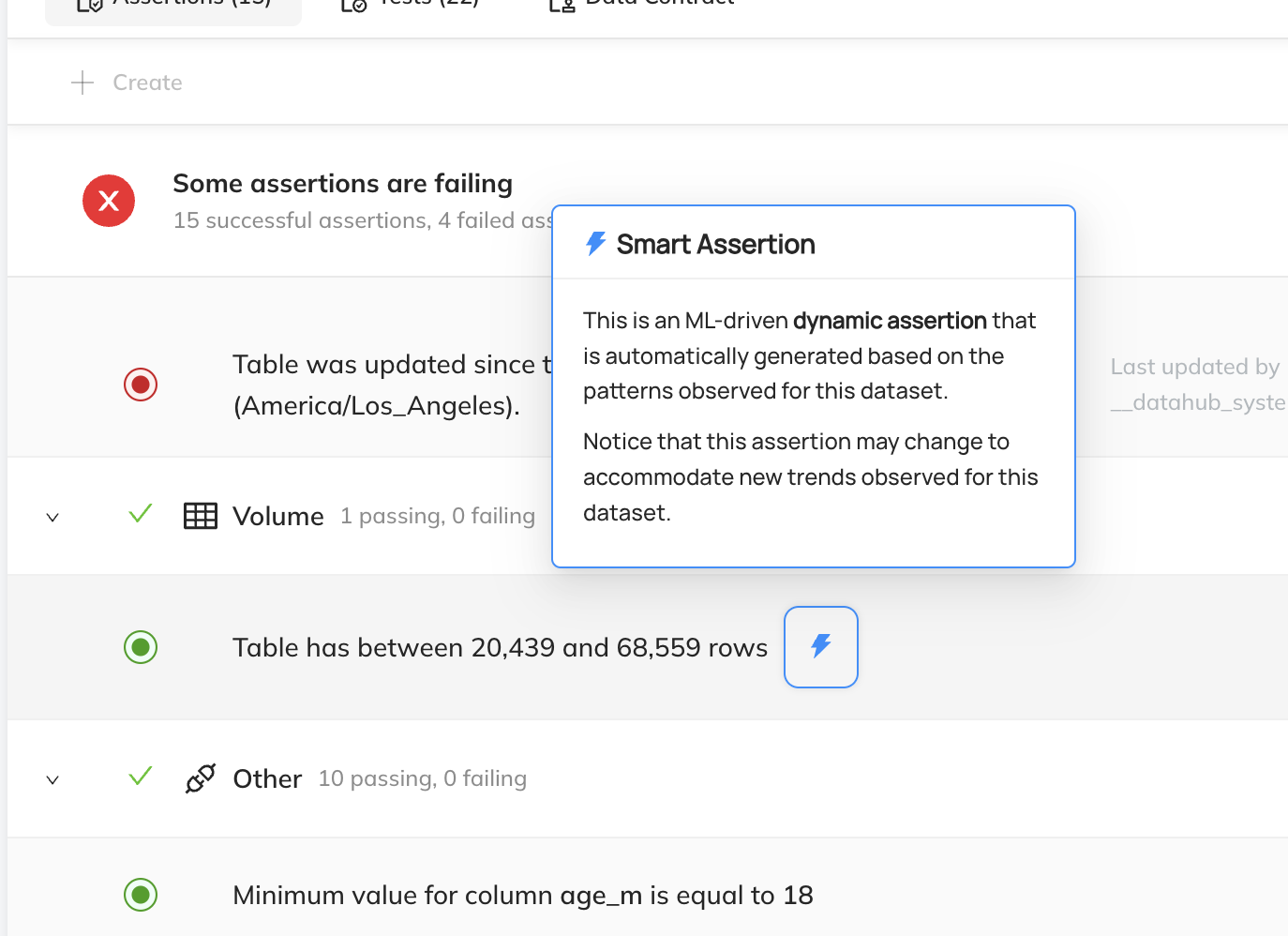

Freshness Monitoring & Alerting: Automatically detect and alert when data freshness issues occur, to ensure timely updates by proactively monitoring key datasets for updates. Check out Assertions and Freshness Assertions, Available in Acryl Managed DataHub Only.

Freshness Assertions Results

Smart assertions checks for changes on a cadence based on the Table history, by default using the Audit Log.

Incident Management : Centralize data incident management and begin to effectively triage, prioritize, communicate and resolve data freshness issues to all relevant stakeholders. Check out subscription & notification features as well.

By implementing these solutions, you can ensure that your key datasets and models are always up-to-date, maintaining their relevancy, accuracy, and reliability for critical use cases within your organization.

Conclusion

Ensuring data freshness is essential for the performance and reliability of critical datasets and AI/ML models. By understanding the importance of data freshness and implementing best practices and automated solutions, you can effectively manage and mitigate delays, thereby protecting your revenue and reputation. DataHub is designed to help you achieve this, providing the tools and features necessary to keep your data fresh and your operations running smoothly.